Face Perception

We have shown that the efficiency with which observers use relevant information from faces changes with learning and with face orientation. For example, observers are significantly better at discriminating between upright faces than between upside-down faces. In contrast to the standard "configural" vs. "parts" account, our research suggests that observers use qualitatively similar processes to perceive faces in all orientations.

However, observers use the relevant information more efficiently when faces are upright, possibly because they have different amounts of experience with faces in different orientations.

In related work, we have been using converging methods to determine which parts of a face observers use for recognition. Our results from response classification and image reduction suggest that observers rely on relatively localized regions of the face for discrimination, primarily the region around the eyes and eyebrows. We have also shown that observers do not take advantage of additional, more widely distributed information when it is provided. Consistent with these results, recent work has shown that standard measures of configural processing are unrelated to people's performance on a face identification task.

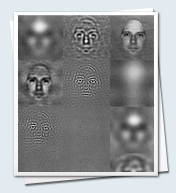

We are also interested in how spatial frequency information is used in face processing, and how its use changes under different conditions (e.g., learning and inversion). Our research has shown that observers are most efficient at using mid spatial frequencies for face recognition, a result that differs from that for other objects such as letters. Frequency tuning for face recognition also does not appear to vary with orientation, which further supports the idea that upright and inverted face perception may not be based on distinct processes.

In addition to our behavioural work, we are using fMRI and EEG to explore other aspects of face processing. For example, we are using fMRI to investigate how flexible the fusiform face area is, and we have used EEG to show that the visual system does not have a specific foveal bias for face processing when stimuli are appropriately scaled. We have also shown that the face-sensitive N170 component is not related to face discrimination and may simply reflect face detection.

Finally, in collaboration with Mel Rutherford and Masayoshi Nagai, we are exploring the effects of autism and cross-cultural differences on face processing, and we are also examining the effects of aging on face perception.

Recent sample publications

Hashemi, A., Pachai, M. V., Bennett, P. J., & Sekuler, A. B. (2019). The role of horizontal facial structure on the N170 and N250. Vision Research, 157, 12-23.

Pachai, M. V., Bennett, P. J., & Sekuler, A. B. (2019). The effect of training with inverted faces on the selective use of horizontal structure. Vision Research, 157, 24-35.

Creighton, S., Bennett, P., & Sekuler, A. (2017). Contribution of internal noise & efficiency to older adults' face discrimination. Journal of Vision, 17(10), 612.

Pachai, M. V., Sekuler, A. B., Bennett, P. J., Schyns, P. G., & Ramon, M. (2017). Personal familiarity enhances sensitivity to horizontal structure during processing of face identity. Journal of Vision, 17(6), 5.

VCNLab research on Face Perception is funded by NSERC, CIHR, and the Canada Research Chair program.
